As a young student, I was taught that mathematics is the language of physics. While largely true, one also cannot communicate in CMS at the CERN LHC without learning a plethora of acronyms. When we wrote the CMS Trigger and Data Acquisition System (TriDAS) Technical Design Report (TDR) in 2000, we included an appendix that contained a dictionary of 203 acronyms from ADC to ZCD, quite necessary to digest this document. In the following years, the list of acronyms would grow exponentially. We even have nested acronyms: LPC, for example, stands for LHC Physics Center. In a talk many years ago, one of my distinguished collaborators flashed a clever new creation and quipped “I believe this is the first use of a triply-nested acronym in CMS.” I do not know if we have since reached quads or quints. Somehow it would not surprise me.

One of the latest creations is YETS: Year End Technical Stop, referring to the period between the end of the heavy ion run on 7 December 2011 and the restart of LHC operations, due to begin next week with hardware commissioning and leading ultimately to pp collisions in April. So what do physicists do during YETS? A lot, as it turns out!

One of the major activities is preparing to cope with the projected instantaneous luminosity of 7 × 10³³ cm⁻² s⁻¹. This luminosity will likely come with a 50 nanosecond beam structure (the time between collisions), as was used in 2011. This means that the average number of pp interactions per triggered readout will be about 35: the one you tried to select with the trigger, plus many more piled on top of it. This affects trigger rates and thresholds, background conditions, and the algorithms used in the physics analysis. In addition, we shall likely run at 8 TeV total energy (compared to 7 TeV last year). These new expected conditions are being simulated, a process requiring a huge amount of physicist manpower and computing resources. The results are carefully scrutinized in collaboration-wide meetings. That is the “glory” activity.
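For readers who want to see where a pileup number of that size comes from, here is a rough back-of-the-envelope sketch in Python. Only the luminosity is taken from the text above. The inelastic pp cross section (about 70 mb), the number of colliding bunches (1380) and the LHC revolution frequency (11245 Hz) are assumptions chosen for illustration, and the function name is hypothetical.

```python
# Rough estimate of the mean number of pp interactions per bunch crossing.
# mu = L * sigma_inel / (n_bunches * f_rev)

MILLIBARN_TO_CM2 = 1e-27  # 1 mb = 1e-27 cm^2


def mean_pileup(luminosity_cm2_s, sigma_inel_cm2, n_bunches, f_rev_hz):
    """Average number of inelastic pp interactions per bunch crossing."""
    crossing_rate_hz = n_bunches * f_rev_hz  # colliding crossings per second
    interaction_rate_hz = luminosity_cm2_s * sigma_inel_cm2
    return interaction_rate_hz / crossing_rate_hz


if __name__ == "__main__":
    mu = mean_pileup(
        luminosity_cm2_s=7e33,                   # from the text
        sigma_inel_cm2=70 * MILLIBARN_TO_CM2,    # assumed, about 70 mb
        n_bunches=1380,                          # assumed for 50 ns spacing
        f_rev_hz=11245.0,                        # assumed revolution frequency
    )
    print(f"mean interactions per crossing: {mu:.1f}")
```

With these inputs the estimate lands at roughly 30 interactions per crossing, the same ballpark as the figure quoted above. The exact value depends on the cross section and bunch count one assumes.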

Besides the glory work, there is also a huge amount of technical service work, both hardware and software. At CMS in Point 5 (P5) we have observed beam-induced pressure spikes (rise and fall) in the vacuum. The pumping required for recovery is using up the supply of non-evaporable getter (NEG) needed to achieve ultrahigh vacuum (UHV). The UHV in turn is needed to ensure that the beams do not abort, which nearly happened last year. A huge effort was launched to radiograph the region in question to see if the same problems of drooping radio frequency (RF) fingers are present as have been observed in other sectors. An electrical discharge from the RF fingers can possibly cause the UHV spikes. Also at P5, work will be done on the zero degree calorimeter (ZDC), the Centauro And Strange Object Research (CASTOR) detector (not to be confused with CERN Advanced Storage Manager), and the cathode strip chamber (CSC), resistive plate chamber (RPC) and drift tube (DT) muon detectors, which are accessible without opening the yoke of CMS. In addition, there is maintenance of the water cooling and rewiring of the magnet circuit breaker.

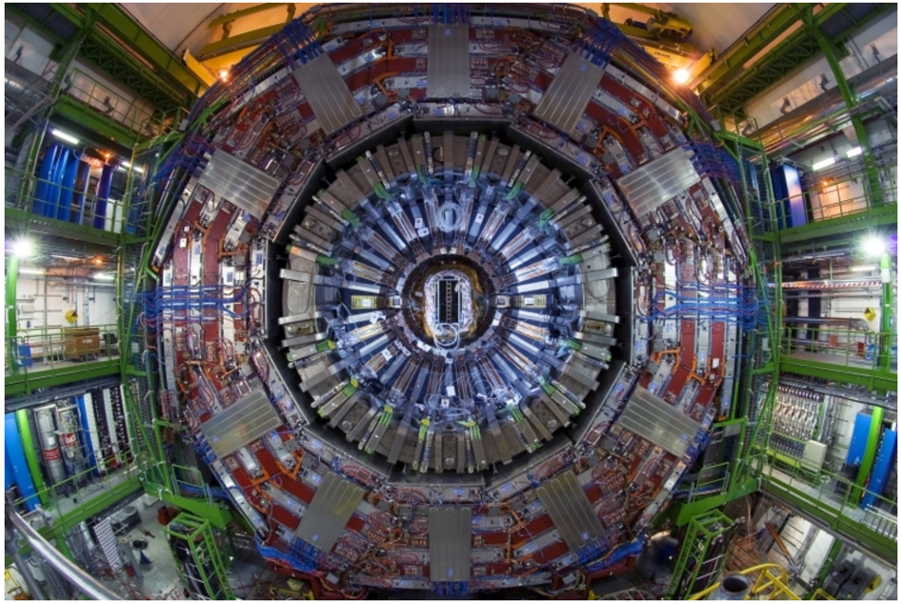

Each of the CMS subsystems has work to do, as was evident on a recent trip into the P5 pit. The detailed activities of the pixel (PX), silicon tracker (TK), electromagnetic calorimeter (ECAL), and muon (MU) subdetectors are beyond the scope of this blog. I can give you some idea of what is going on with the hadron calorimeter (HCAL), where some of the details are fresh in my mind.

The HCAL activities are quite intense. Detector channel-by-channel gains, the numbers that are needed to convert electrical signals into absolute energy units, can vary with time for a variety of reasons (e.g. radiation damage) and need periodic updating. This information has to go into the lookup tables (LUTs) that are used by the electronics for trigger primitive generation (TPG); the trigger primitives are in turn used by the level-1 hardware trigger to select events. If the numbers in the LUTs are slightly off, then the energy threshold that we think we are selecting is off target, which is very bad because trigger rates vary exponentially with energy.
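To get a feel for why a small gain error matters so much, here is a toy Python sketch. It assumes the rate above a threshold falls as a pure exponential, exp(-E/slope), which is a simplification and not the real CMS trigger spectrum. The threshold, slope and gain error are made-up numbers, and the function name is hypothetical.

```python
import math


def rate_ratio(threshold_gev, gain_error, slope_gev):
    """Actual rate divided by intended rate for a toy exponential spectrum.

    gain_error is the fractional amount by which the LUT overestimates
    energies (0.05 means deposits are reported 5% too high), so the true
    energy needed to pass the cut drops to threshold / (1 + gain_error).
    """
    effective_threshold = threshold_gev / (1.0 + gain_error)
    return math.exp((threshold_gev - effective_threshold) / slope_gev)


if __name__ == "__main__":
    # Hypothetical numbers: 30 GeV threshold, 5 GeV exponential slope.
    for err in (0.00, 0.02, 0.05, 0.10):
        ratio = rate_ratio(30.0, err, 5.0)
        print(f"gain error {err:4.0%}: rate changes by factor {ratio:.2f}")
```

In this toy model a 5% gain error raises the rate by about a third, and the effect grows quickly with the size of the error. The steeper the spectrum (the smaller the assumed slope), the worse it gets.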

The HCAL uses 32 optical S-LINKs (where the S stands for simple, although I don’t remember anything simple about getting it to work) to send the data to DAQ computers. My group at Boston designed and built the front end driver (FED) electronics that collect and transmit the data on these links. The data transmission involves a complex buffering and feedback system so that the data flow can be throttled without crashing in case something goes wrong. The data flow reached its design value of 2 kBytes per link per event at the end of 2011, so we are going to reduce the payload by eliminating some redundant data bits which were previously useful for commissioning the detector but are no longer needed. This will allow us to comfortably handle the expected increase in event size due to increased pileup. Also, 4 of our boards developed dynamic random access memory (DRAM) problems after a sudden power failure. It took two days of my time at CERN to inventory spares, isolate the affected DRAMs, and arrange for repairs.

The HCAL computers at P5 are running 32-bit Scientific Linux CERN (SLC4, another nested acronym). While we enjoyed the stability of this release over a number of years, it will no longer be supported by CERN after February 2012. These computers are being upgraded (as I write this!) to 64-bit SLC5.

The HCAL forward (HF) calorimeters will have their photomultiplier tubes (PMTs) replaced during the first long shutdown (LS1). We would like to do measurements with a few new PMTs in order to study performance stability and aging in the colliding beam environment. This activity requires building and testing new high-voltage (HV) distribution printed circuit boards (PCBs). The HV PCBs require testing and installation in the current HF readout boxes (ROBOXs) while there is still access to the detector.

Our group at Boston is also involved with designing electronics needed for the HCAL upgrade, the first part of which will take place in the first long shutdown (LS1). The new electronics is based on micro telecommunications computing architecture (uTCA). In Boston we have built a uTCA advanced mezzanine card for the unique slot number 13 (AMC13). This card will distribute the LHC clock signals needed for trigger timing and control (TTC) as well as serve as the FED. We plan to test these cards during the 2012 run. To prepare for these tests we have installed an AMC13 card in the central DAQ (cDAQ) lab which can transmit data on optical fibers to a multi optical link (MOL) card, which exists in the form of a peripheral component interconnect (PCI) card that can be readily attached to a computer. In addition, to be able to perform the readout tests with the new electronics without interrupting the physics data flow, we have installed optical splitters on the HCAL front end digital signals for a portion of the detector, parts of the HCAL barrel (HB), HCAL end cap (HE), and HCAL forward (HF), so that one path can be used for physics data and the other path for uTCA tests.

I can assure you that the activities in parts of CMS are (almost) as intense as during physics runs. There has been a lot to do!

I once met a secretary in California, the land of innovative thinkers, who had been exposed to physics through typing exams and could not understand why students thought physics was so hard. She thought each letter always stood for the same thing and once you learned them you were pretty much set. I am not sure she believed me when I told her there weren’t enough letters to go around. Same thing with acronyms. A quick search for CMS will include: Centers for Medicare & Medicaid Services (a nested acronym), Content Management System, Chicago Manual of Style, Chronic Mountain Sickness, Central Middle School, City Montessori School, Charlotte Motor Speedway, Comparative Media Studies, Central Management Services, Convention on Migratory Species, Correctional Medical Services, College Music Society, Colorado Medical Society, Cytoplasmic Male Sterility, Certified Master Safecracker, Cryptographic Message Syntax, Code Morphing Software, Council for the Mathematical Sciences, Court of Master Sommeliers, and my own favorite, a neighborhood landscaper, Chris Mark & Sons; I am the proud owner of one of their shirts.

And for those against acronym abuse, you can buy an AAAAA T-shirt (maybe I will too).

Thanks to Kathryn Grim for suggesting a blog about what goes on at an LHC experiment during shutdown.

James Rohlf Originally posted on Quantum Diaries
