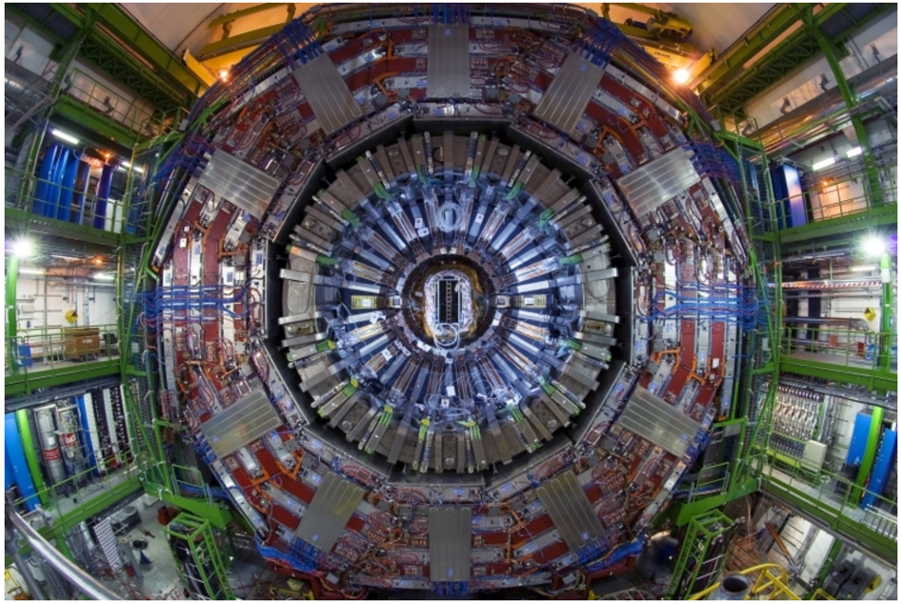

The LHC provides CMS with millions of collisions each second, and a wealth of physics data is obtained from the proton-proton interactions. To record all these data for analysis, every CMS sub-detector has to perform optimally. The sooner a problem is found and fixed, the better the overall quality of the data. With the LHC operating under new conditions in 2012, colliding more bunches of protons together in a tighter beam of particles, CMS has to ensure that the hardware as well as the software can cope with the challenges and retain the high quality of the data acquired so far.

To this end, CMS has reorganised the group responsible for scrutinising the quality of the data, forming a new group called “Physics Performance and Dataset (PPD)”. “The role of PPD is to ensure the quality of data that are provided to the physics analysis groups, both while recording the data during collisions, as well as after the data have been processed and stored,” says Lucia Silvestris, the coordinator of the new group.

Only a tiny fraction of the data generated inside the CMS detector are selected by the Trigger system for storage. After the Trigger picks the data worth storing, they are sent to the computing centre, where the reconstruction machines prompt-reconstruct them. (Reconstruction is CMS lingo for putting together the various pieces of information from the different sub-detectors to get a coherent image of each collision.) A subset of the data is also analysed in real time (i.e. Online) by PPD’s Data Quality Monitoring (DQM) team in the CMS Control Room. The person on DQM shift looks at histograms, monitoring plots and event displays, and provides immediate feedback on the quality of the data to the shift leader and CMS Run Coordination.

A second shift team, called Offline Data Quality Monitoring, is located at the CMS Centre at CERN and performs a more refined analysis of the prompt-reconstructed data. If a problem is spotted, the data are flagged as “bad for analysis” and the team tries to understand what caused it. Depending on the origin of the problem, these data may still be recovered, either in the same week, following consultation with the detector teams and Physics Object Groups, or when the data are re-processed.

Data used for analysis must also be properly calibrated. The PPD group works with the detector teams and Physics Object Groups to provide the proper calibration and alignment constants. PPD also validates and signs off on the production releases of the physics code, which can then be used for simulation production, prompt reconstruction and data re-processing.

The LHC is ready to start the 2012 run in a few days, and the CMS PPD group is all set to tackle the challenges it will present.
