The spring joint offline and computing week was held at CERN on March 25-28 (the full agenda can be found in Indico). The main theme of the week was the readiness of the CMS computing infrastructure and the CMSSW software for the 2015 startup. The range of topics was very wide, covering the 2014 Computing Milestones, the CSA14 challenge, the status of ongoing geometry developments for the 2015 CMS detector, the definition of “analysis trains” for automating analysis tuple production, simulation and reconstruction readiness for 2015, effective use of disk storage, managed Tier-1 disks with a full separation between disk and tape resources, full deployment of data federations worldwide, the rollout in production of the new version of our distributed analysis tool (CRAB 3), shared workflows and distributed prompt reconstruction. Here, we describe a few topics of particular interest in more detail.

The use of a multi-threaded CMSSW reconstruction application is essential for both online and offline operations during 2015. A multi-threaded application brings large benefits, both in terms of memory usage and of the time required to run an application over a complete data sample, e.g. reconstructing all events for a lumisection within a primary dataset. Substantial work has gone into the development of a multi-threaded framework, and CMS is the first LHC experiment to have completed such a framework. We are now focused on the changes to reconstruction algorithms needed to use this framework efficiently. Our short-term goal is to update enough algorithms to reach more than 90% efficiency before the CMSSW_7_1_0 release. We can then use this application for scale testing as a function of the number of cores devoted to a single CMSSW job executing on the Grid or on the HLT farm. By adapting even more code to run correctly in a multi-threaded framework, we should be able to increase the overall efficiency of our data processing. These additional improvements are targeted for the CMSSW_7_2_0 release in the fall.
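To give a feeling for what a 90% efficiency target implies, the sketch below uses a simple Amdahl's-law model. This model is an assumption made here for illustration only: the text does not define how efficiency is measured, and the function names and the serial-fraction parameter are hypothetical. Efficiency is taken as the speedup divided by the number of cores.

```python
# Illustrative Amdahl's-law model (an assumption, not the CMS definition
# of efficiency): a fraction of the per-event work cannot run in parallel.

def speedup(serial_fraction, n_cores):
    """Ideal speedup on n_cores when serial_fraction of the work is serial."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n_cores)


def efficiency(serial_fraction, n_cores):
    """Speedup per core; 1.0 means perfect scaling."""
    return speedup(serial_fraction, n_cores) / n_cores


def max_serial_fraction(target_efficiency, n_cores):
    """Largest serial fraction that still reaches target_efficiency.

    Follows from efficiency = 1 / (1 + s * (n - 1)), solved for s.
    Requires n_cores > 1.
    """
    return (1.0 / target_efficiency - 1.0) / (n_cores - 1)


if __name__ == "__main__":
    # Hypothetical core counts, chosen only to show the trend.
    for n in (2, 4, 8, 16):
        s = max_serial_fraction(0.90, n)
        print(f"{n:2d} cores: serial fraction must stay below {s:.4f}")
```

In this simplified picture, the tolerable serial fraction shrinks as the core count grows, which is one way to see why scale testing as a function of the number of cores is a natural next step.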

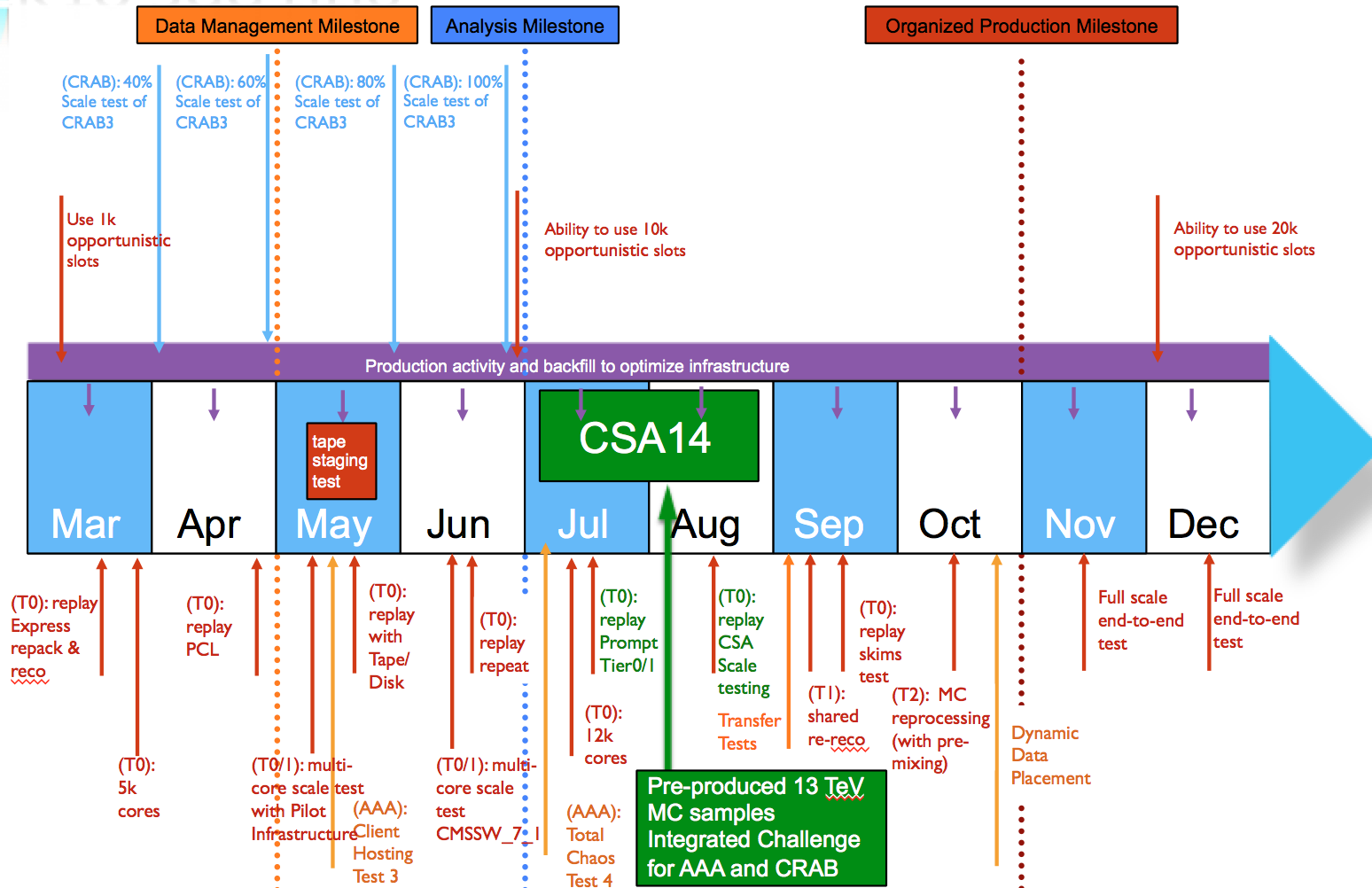

Computing has been assessing the status of the three major milestones in 2014, i.e. the Data Management milestone (end of April 2014), the Distributed Analysis milestone (end of June 2014) and the Organized Processing milestone (end of October 2014). In terms of Data Management, progress is visible on the disk-tape separation at the Tier-1 sites, which will increase our ability to use our Tier-1 resources flexibly and efficiently: CNAF, CC-IN2P3, JINR, KIT, PIC and RAL have finalized the separation, while CERN and FNAL will finish soon. The deployment of data federations is proceeding at a very good pace: file-open and file-reading tests are being run, and a full-scale test is foreseen during CSA14, in which the data federation will be used at the scale envisioned for the real system, with as many sites as possible involved. In terms of Distributed Analysis, the goal for June is to demonstrate the full production scale of the new CRAB3 distributed analysis system. The program of work is on track: as previously announced, we will soon involve the wider community, as we need the active participation of power users from Physics during the monthly scale tests before CSA14, as well as during the challenge itself. In terms of Organized Processing, one key discussion item was the good progress on the use of the HLT farm for offline processing. The availability of the HLT resources for reprocessing is mandatory in Run-2, and an intense program started in 2013. Since then, the HLT farm has participated successfully in official reprocessing campaigns, and this is now smoothly turning into routine operation. The CERN-P5 network link was upgraded to 60 Gbps (three times the 2013 capacity), which will help overcome some limitations on the maximum number of jobs that can run in parallel. Work is also under way to prepare the HLT farm for inter-fill usage (establishing a protocol to start up and shut down machines, investigating check-pointing or machine suspension, etc.).
Other areas of work are the prompt-reco workflow optimization, access to opportunistic resources, exploitation of multi-core enabled applications and resource allocation.

A first proposal for a new “mini-AOD” format, targeting around 10% of the size of the current AOD, was presented. This format is intended to hold sufficient information to serve about 80% of CMS analysis, while dramatically reducing the disk and I/O resources needed for analysis. Such large reductions can be achieved using a number of techniques, including defining light-weight particle-flow candidates, increasing pT thresholds for keeping physics-object candidates, and reducing numerical precision where it is not required. A first version of this format is targeting the CMSSW_7_1_0 release; however, work will continue towards a final definition of both the AOD and mini-AOD in the fall.
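As a rough illustration of the reduced-precision idea, the sketch below stores a few values in IEEE 754 half precision (16 bits) instead of single precision (32 bits) using Python's standard `struct` module. The choice of half precision, the helper names and the sample values are assumptions made for this example; the text does not say which encoding the mini-AOD uses.

```python
import struct

# Hypothetical example values (e.g. transverse momenta in GeV); not real data.
SAMPLE_VALUES = [0.7, 12.5, 45.3, 250.0]


def pack_single(values):
    """Serialize values as little-endian 32-bit floats (4 bytes each)."""
    return struct.pack(f"<{len(values)}f", *values)


def pack_half(values):
    """Serialize values as little-endian 16-bit floats (2 bytes each)."""
    return struct.pack(f"<{len(values)}e", *values)


def unpack_half(blob):
    """Recover the values stored by pack_half."""
    return list(struct.unpack(f"<{len(blob) // 2}e", blob))


def max_relative_error(original, restored):
    """Largest relative difference between original and restored values."""
    return max(abs(o - r) / abs(o) for o, r in zip(original, restored))


if __name__ == "__main__":
    single = pack_single(SAMPLE_VALUES)
    half = pack_half(SAMPLE_VALUES)
    restored = unpack_half(half)
    print(f"single precision: {len(single)} bytes")
    print(f"half precision:   {len(half)} bytes")
    print(f"max relative error: {max_relative_error(SAMPLE_VALUES, restored):.2e}")
```

Halving the storage per value costs a relative rounding error below one part in a thousand for values in the normal half-precision range, which may be acceptable for quantities whose measurement resolution is much coarser than that.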

Beyond the focus on 2015, there are new research and development efforts aimed at understanding the most effective computing model for CMS reconstruction and analysis. The overall goal is to ensure that CMS can continue to make cost-efficient use of computing resources as they evolve in the future, and also to evaluate “game-changing” approaches, enabled by industry developments, that could bring large efficiency gains in the CMS computing model. These groups will enable new collaborators to get involved in the R&D topics of their interest using standardized tools and configurations. The Computing area plans to outline a list of projects for the upgrade in a kick-off workshop planned for June 2014 in Bologna.

by David Lange, Elizabeth Sexton-Kennedy, Peter Elmer, Maria Girone and Daniele Bonacorsi
