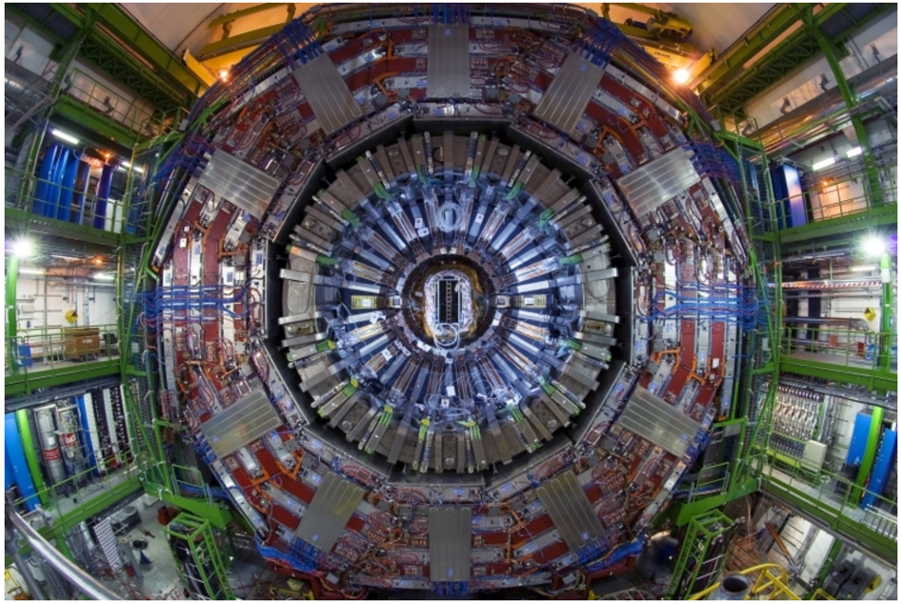

The CMS experiment employs a new machine-learning algorithm to improve the reconstruction of invisible particles.

How can one measure particles that escape the detector without interacting? This is the challenge for a key fundamental particle, the neutrino, which is produced in decays of various heavy particles, such as top quarks, b quarks, and W bosons. At CMS, we chase such invisible particles through an imbalance in the momenta of the visible particles in the plane transverse to the beams. This imbalance, the so-called missing transverse momentum, or MET, is then assigned to the invisible particles. MET is also one of the most important clues in searches for the elusive dark matter. Now, thanks to a powerful new algorithm called DeepMET, CMS physicists have improved their ability to measure this invisible momentum.
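To make the idea of a momentum imbalance concrete, here is a minimal sketch in Python. It assumes the textbook definition of MET as the negative vector sum of the visible transverse momenta; the function name and the toy event are illustrative and are not taken from the CMS software.

```python
import numpy as np

def missing_transverse_momentum(pt, phi):
    """Return (magnitude, azimuth) of the negative vector sum of visible pT.

    pt  : transverse momenta of the visible particles (GeV)
    phi : azimuthal angles of the visible particles (radians)
    """
    pt = np.asarray(pt, dtype=float)
    phi = np.asarray(phi, dtype=float)
    # Project each transverse momentum onto the x and y axes.
    px = pt * np.cos(phi)
    py = pt * np.sin(phi)
    # The imbalance points opposite to the visible vector sum.
    met_x = -px.sum()
    met_y = -py.sum()
    return np.hypot(met_x, met_y), np.arctan2(met_y, met_x)

# Toy event (made-up numbers): two visible particles at right angles.
met, met_phi = missing_transverse_momentum([40.0, 30.0], [0.0, np.pi / 2])
# met is 50 GeV here: something invisible must balance the visible particles.
```

In a real collision the sum runs over all reconstructed particles, so every individual mismeasurement feeds into the result.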

Accurate MET reconstruction is vital, but challenging. MET is inferred from all the visible particles in the detector, so the measurement uncertainties of the individual particles accumulate in the sum and give the MET estimate a large variance. In addition, standard MET estimators, including particle-flow (PF) MET and pileup-per-particle-identification (PUPPI) MET, degrade in the challenging environment at the LHC, where each beam crossing can produce dozens of simultaneous proton-proton collisions (also known as “pileup”). DeepMET is designed to maintain a robust performance under these harsh conditions.

Smarter MET with deep learning

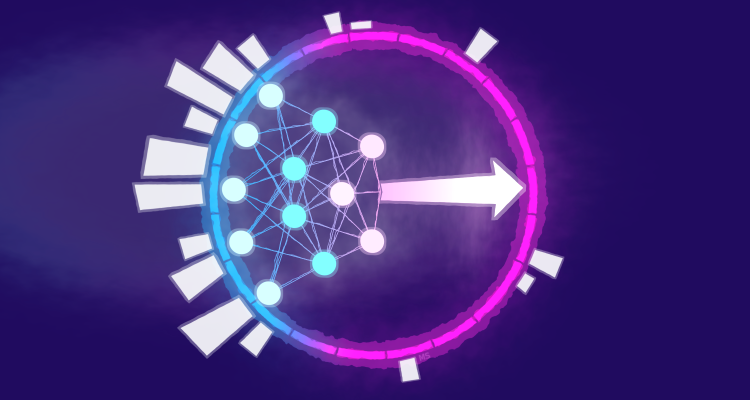

DeepMET employs a deep neural network (DNN) trained to optimally estimate the true MET based on the properties of the reconstructed particles, such as the particle type, its electric charge, and its momentum.

In order to deal efficiently with the thousands of particles produced in a single collision at the LHC, we train the network to give us the optimal weight for each particle entering the MET calculation, and sum up their weighted momenta afterwards. This leads to a network size of about 4500 trainable parameters, which is extremely compact by today’s standards and can be trained easily on a single graphics card. The simulated training dataset includes a mix of Z→μμ and top quark pair events to cover a broad range of event topologies.

Figure 1: The DeepMET network architecture. The neural network takes features of all particles as inputs, calculates individual weights, and estimates the MET as the sum of weighted momenta.
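The weighted sum described above can be sketched in a few lines of Python. This is an illustration only: the weights below are hand-picked numbers standing in for the output of the trained network, the function name is hypothetical, and the minus sign assumes the usual convention that MET balances the visible momenta.

```python
import numpy as np

def weighted_met(px, py, weights):
    """Return (met_x, met_y) from per-particle weights and momenta.

    px, py  : transverse momentum components of each particle (GeV)
    weights : one weight per particle (in DeepMET these would come
              from the neural network; here they are plain inputs)
    """
    px, py, w = (np.asarray(a, dtype=float) for a in (px, py, weights))
    # Scale each particle's momentum by its weight, then sum and flip the sign.
    met_x = -(w * px).sum()
    met_y = -(w * py).sum()
    return met_x, met_y

# Toy event (made-up numbers): the third particle is treated as pileup
# and gets weight 0, the second is only partially trusted.
px = [30.0, -10.0, 5.0]
py = [0.0, 20.0, -5.0]
w = [1.0, 0.5, 0.0]
met_x, met_y = weighted_met(px, py, w)
# met_x = -25.0, met_y = -10.0
```

Setting every weight to 1 recovers the plain negative vector sum, so the weights can be read as per-particle corrections to that baseline.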

Performance that stands out

So, how well does it perform? DeepMET was tested on both simulations and actual Run 2 collision data at 13 TeV, and the results are impressive:

- In clean, well-understood events like Z→μμ, DeepMET shows a significant improvement in MET resolution with respect to the previous algorithms.

- Even as the pileup increases, DeepMET maintains a stable and reliable performance.

- The performance gains transfer well to other physics processes including the production of W bosons, Higgs bosons, or supersymmetric dark matter candidates.

These improvements mean that physicists searching for new physics, especially in signatures with invisible particles, will have a clearer, more accurate picture of what’s going on.

Figure 2: MET resolution in Z boson events plotted vs the number of interaction vertices in LHC beam crossings. The lower and flatter, the better!

Ready for physics

DeepMET is now integrated in the CMS software framework and available for use in physics analyses. In fact, CMS already used it in the measurement of the W boson mass a few months ago, which achieved a world-leading precision of close to 0.01%! This is a powerful example demonstrating that machine learning is advantageous to high-precision analyses.

As we move toward the high-luminosity era of the LHC, tools like DeepMET will help us to reconstruct the momenta of invisible particles, even when buried under other collisions, and possibly reveal something new!

Written by: Markus Seidel and Yongbin Feng, for the CMS Collaboration

Edited by: Andrés G. Delannoy

Read more about these results:

- CMS Physics Analysis Summary (JME-24-001): "DeepMET: Improving missing transverse momentum estimation with a deep neural network"

- @CMSExperiment on social media: Bluesky - Facebook - Twitter - Instagram - LinkedIn - TikTok - YouTube