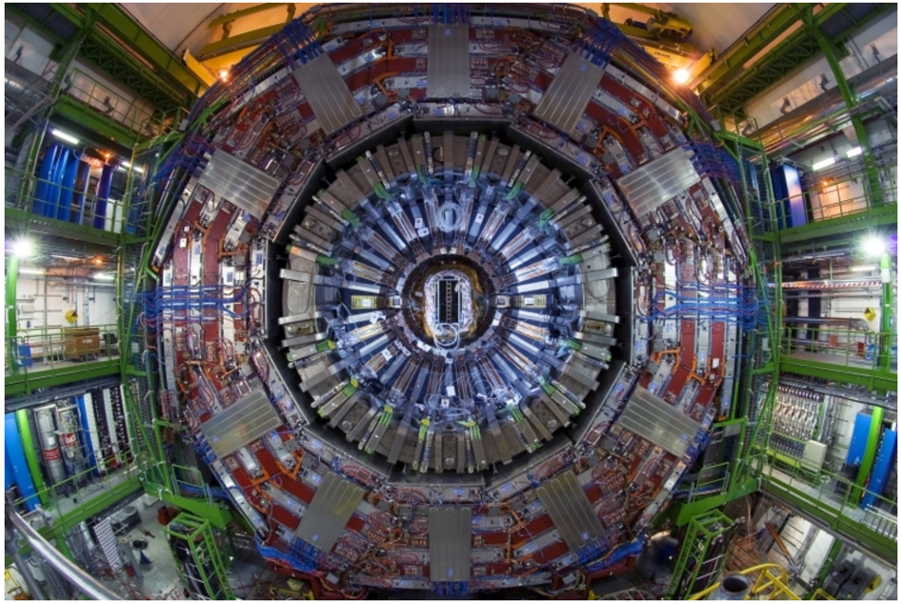

The Large Hadron Collider (LHC) at CERN is gradually restarting and in the summer bunches of protons will collide again at high energy, marking the start of Run 3.

Is CMS ready to manage the big amount of new data? The CMS Data Acquisition and the High Level Trigger systems underwent massive refurbishment during the past three years.

The LHC proton beams cross each other 40 million times each second at CMS, producing approximately 1MB of data per collision. That is a whopping 40 Terabytes of data each second!

It is technically impossible to read out and store all this data: only a small fraction can be while the rest is lost forever. The very first selection is performed by the Level 1 Trigger that reduces the rate of collisions to analyse to about 100000 per second.

The Data Acquisition (DAQ) system plays the essential role of collecting, inspecting and assembling the data selected by the L1 Trigger. It operates the not-easy task of grouping the data coming from a single collision event together, like organising a big pile of pieces of different puzzles into their original box, and finally sends all the information to the High Level Trigger (HLT). DAQ is also the place where the complete picture of the detector response can be monitored, providing early feedback to physicists running the CMS experiment.

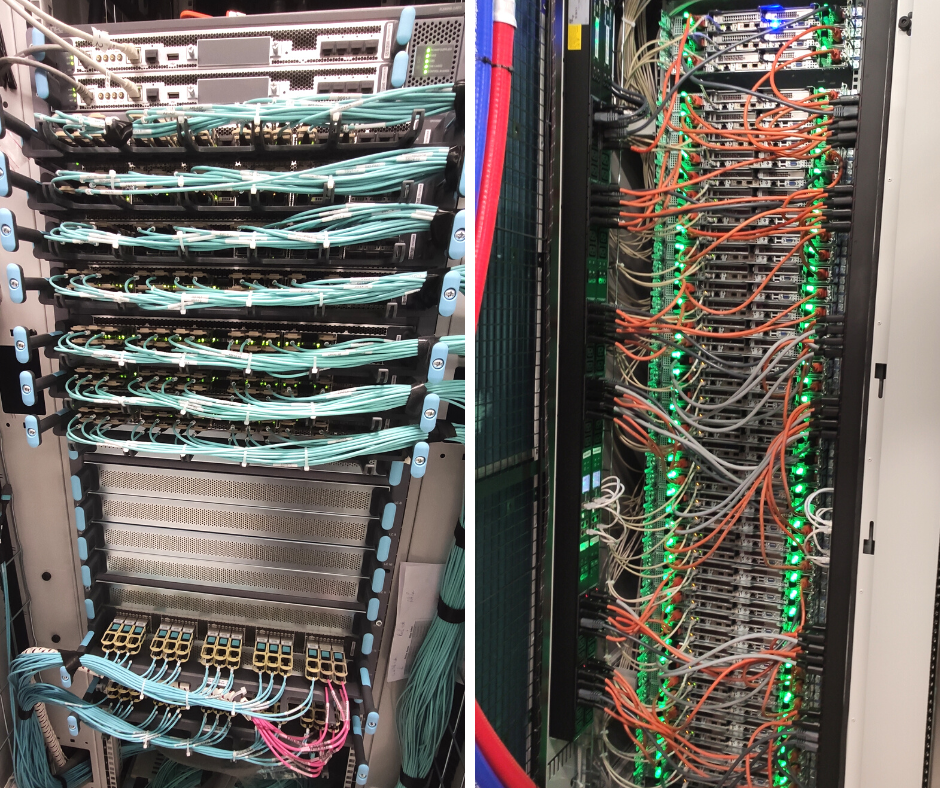

During the Long Shutdown 2, the DAQ system has been refurbished with new technology to allow the overall system to be more compact, less expensive, and more efficient at the same time. The upgrade includes the replacement of all switched networks connecting the detector to the DAQ processing stages, all the way to the HLT farm: this means from the experimental cavern, 100 meters underground, to the surface server room. Moreover, new servers for event assembly, where the information about a unique collision event is assembled for the first time, have been installed.

Right: A network switch cabinet connecting all servers with the DAQ event builder

Left: A rack full of HLT servers - Credits: Attila Racz

The HLT is the first system where the entire information from collisions can be inspected: this information is used to perform a further selection of the events. HLT basically consists of a series of servers that execute sophisticated algorithms in order to select only the most important and relevant physics data. If the HLT “decides” an event is not worthy, then it is lost forever. At the end of the full process, only a few thousand events per second (out of the initial 40 million) are archived for full offline reconstruction, and later analysed by scientists.

The Long Shutdown 2 has been a period of extensive refurbishment also for the HLT with the replacement of the old farm with 246 new servers. Promptly installed in their racks, all the servers underwent stress tests for entire days in order to check their perfect functioning at 100% of their maximum capacity.

Of the overall amount, 200 servers have been assigned for the HLT, renewing it with 25’600 CPU cores in total and 400 new GPUs, two GPUs per server. The remaining 46 servers have been assigned for the Data Quality Monitor, the development of new algorithms and for future tests.

The CPU installed in the new servers are over four times more powerful than the previous generation, allowing the new farm to use less than half the space than in Run 2 for 90% of the CPU power. Thanks to the additional computation power provided by the GPUs however, the farm is 35% more powerful than in the previous Run.

The new HLT farm was commissioned during the first collisions at 900GeV at the LHC.

What about the old server system, still serviceable, that will be decommissioned?

Nothing will be turned off but used as a permanent online cloud, providing additional CPU capacity for the offline processing of CMS data.