What do you do when you would like to play the newest computer game on your old computer, but the game is too demanding for your hardware? You own the previous version of the game and it was fun, but over the years it has grown long in the tooth and is outdated. If you can’t afford to buy a new computer, you may not be able to enjoy the new game and you may well be stuck with the old version.

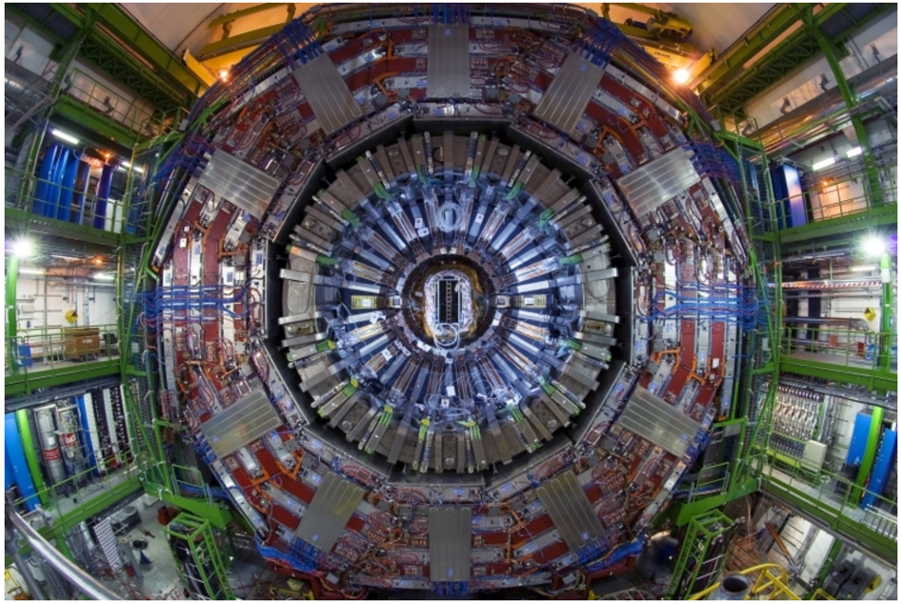

At the LHC, we have a similar problem but at a much larger scale. The experimental collaborations are operating some of the largest scientific computing infrastructures in the world, but we are still limited by the available computing resources. The high computing demands come from the need to process the collision data recorded by our detectors and to produce detailed simulations of the underlying physics. Only by comparing the recorded data with our expectations from simulations based on our current best understanding of the quantum world, can we gain knowledge and improve our description of nature. After achieving a breakthrough, we need to update all simulations to reflect this progress and allow for new insights. With the successful operation of the LHC, more data is being recorded every day by the experimental collaborations, which also means that our demand for simulated data increases by the day. Current estimates predict that we will need about 150 billion simulated events per year after the High-Luminosity LHC begins operation in 2029. Even if we consider an increase in our computing resources following our past experience and the expected technology improvements, the shortfall between our needs and future resources will be a factor of 4 in CPU and a factor of 7 in disk storage. However, for scientists, being stuck with the old simulations is not an option because it jeopardizes our future research capabilities. We need to come up with a solution that enables us to use the newest physics models with the limited resources we have.

This is where new methods based on machine learning (ML) can help. In the last few years, ML methods have been developed to change the properties of simulated samples so that they are equivalent to samples simulated with different parameters or different underlying models. The CMS Collaboration has employed one of these methods and studied how well it does when applied to simulated samples that we use in our data analyses. We have found that a given sample can mimic another sample with high accuracy in all relevant aspects.

The significance of this approach is that now it is sufficient to have just one single simulated sample and the ML algorithm can emulate different simulations from it. The detector simulation and event reconstruction have to be computed only once for the one single sample. Since these two steps require about 75% of the computing resources for each simulated sample, the gain is considerable. Valentina Guglielmi from DESY, the leading author of this study, says “Imagine that you run the old version of your computer game on your old hardware, but it looks and feels like the newest version. The ML model simulates the new game in the background, without a performance penalty. Since there is no visible difference in sound and graphics, you are actually playing the new version of the game without the need to buy a new computer.”

Here is how the new method works: First, a large sample of simulated data is produced using a given physics model. This sample undergoes a detailed detector simulation, which is computationally expensive. Then, additional, smaller samples are produced based on different models in order to train the ML algorithm. Unlike the central sample, these do not require the detailed detector simulation and event reconstruction. The ML model is trained to compute weights for each simulated event, which can then be applied to the central simulated sample to imitate the alternative simulations. In this new study, the CMS Collaboration shows that the ML method can be applied effectively to simulated samples of top quark pair production. It is essential to produce only one sample instead of several, where the additional samples are typically used to compute systematic uncertainties in our measurements. This significantly reduces the computational cost.

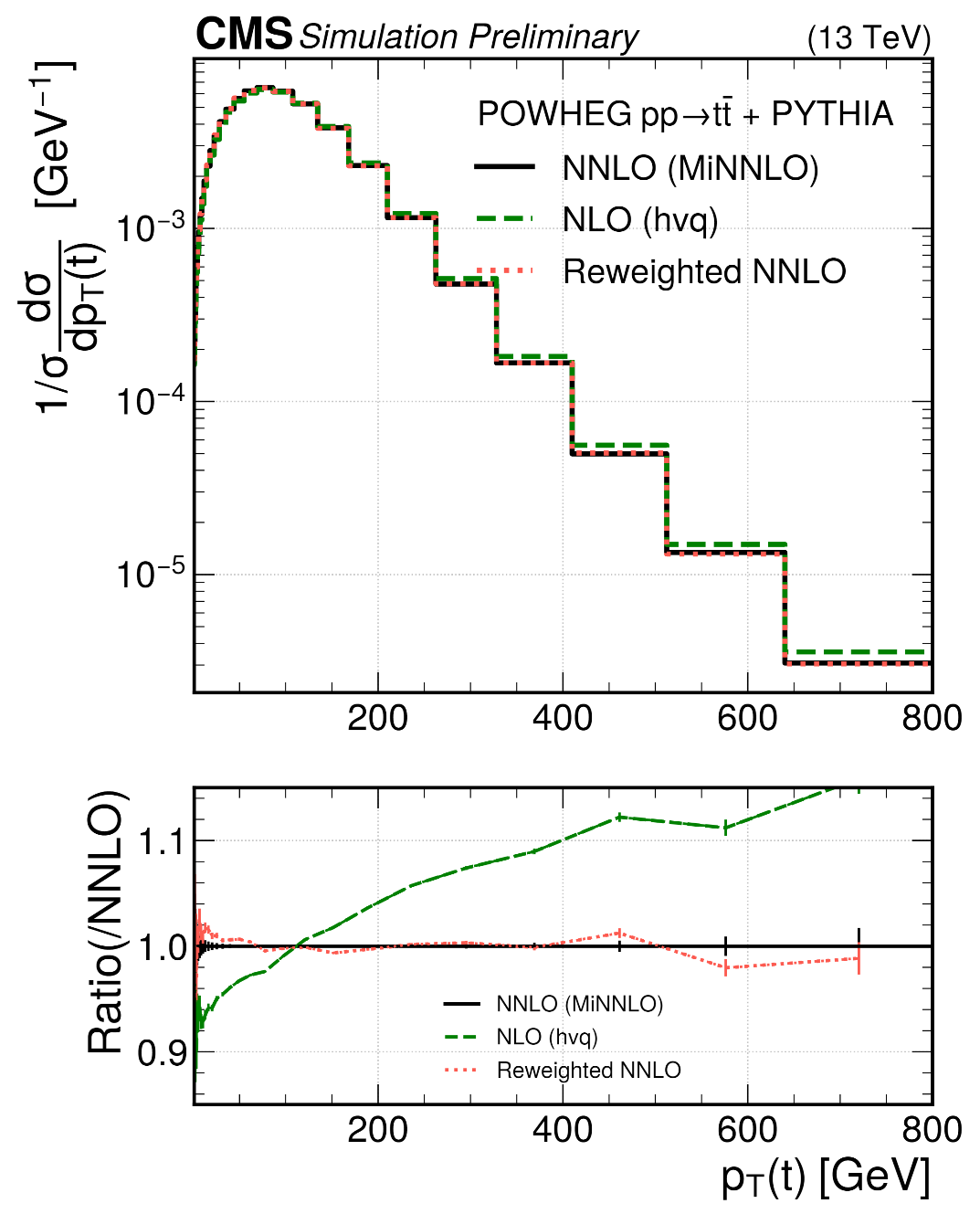

The ML approach has also been applied to mimic a more accurate simulation program from the less accurate central sample, thus showing the possibility of updating our existing simulations to reflect new theoretical progress. This result is shown in Figure 1, which shows distributions in the transverse momentum of top quarks produced at the LHC, from two different simulation programs (named NLO, for next-to-leading order, and NNLO for next-to-next-to-leading order). In the transverse momentum distribution, the more accurate NNLO simulation differs from the NLO simulation by up to 20%. Applying the ML algorithm to the NLO sample, the result mimics the NNLO sample very accurately. The huge advantage of this new method is that it is able to change all relevant features simultaneously which we have never been able to achieve with other methods.

“The developed method is versatile and user-friendly, making it straightforward for researchers to integrate into their analyses,” says Valentina Guglielmi. This innovative approach helps to reduce the needed computing resources for the production of simulated samples, crucial for the future success of the LHC research programme.

Figure 1: Transverse momentum distribution of top quarks simulated from less accurate NLO (green) and more accurate NNLO (black). The ML approach (red) re-weights the NLO sample to accurately mimic the NNLO.

Edited by: Andrés G. Delannoy

Read more about these results:

-

CMS Paper (MLG-24-001): "Reweighting of simulated events using machine learning techniques in CMS"

-

@CMSExperiment on social media: Bluesky - Facebook - Instagram - LinkedIn - TikTok - Twitter/X - YouTube