The 2019 edition of the International Conference on Computing in High Energy and Nuclear Physics (CHEP, chep2019.org) was held on 4–8 November in Adelaide, South Australia. The conference, organized by the University of Adelaide, welcomed over 500 participants.

The CHEP 2019 plenary programme spanned High Energy Physics, molecular science, gravitational wave astrophysics and quantum computing. A number of diversity-oriented initiatives also took place, including a diversity plenary presentation and a panel discussion on the question “What can we, as individuals, do to improve inclusiveness and increase diversity in our field?”

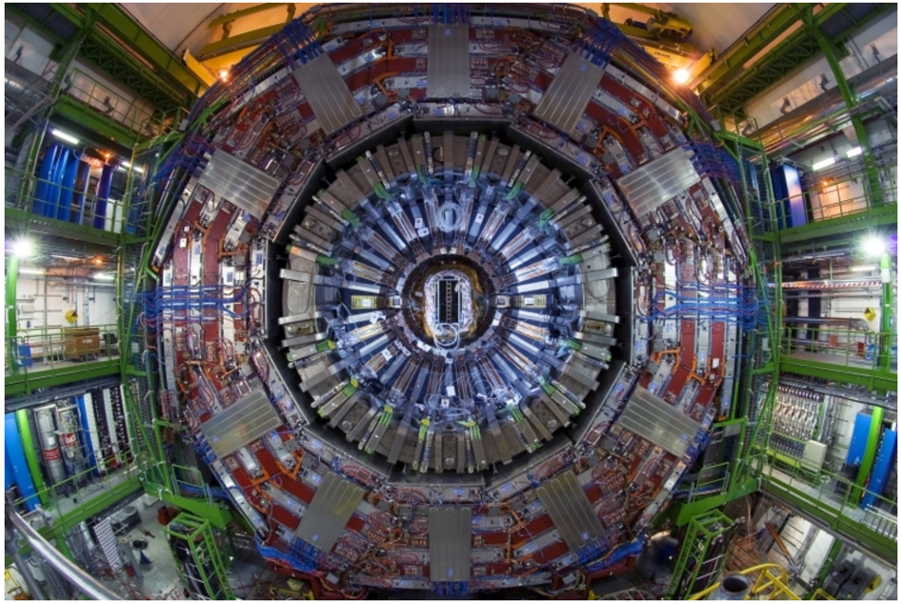

The conference was also an occasion to engage local audiences and explain a little about what we do. The Universal Science public event (universalscience.web.cern.ch), supported by the University of Adelaide, CERN and IPPOG, opened the university doors on Sunday evening, allowing people from the surrounding region to experience hands-on exhibits from CMS, ATLAS and Belle II. Visitors could also attend short presentations on particle physics and high-performance distributed computing, followed by an open panel discussion on current and future possibilities in each area.

This article highlights the major trends discussed in the parallel sessions throughout the week.

Online and Real-Time Computing

The CMS collaboration presented the ongoing effort to reconstruct the decays of 10 billion B mesons. This unprecedented data set was recorded in 2018 with negligible impact on the core physics programme of CMS by “parking” the events, that is, storing them in raw format to be fully reconstructed later. The entire data set is currently being reconstructed and will soon be ready for a detailed analysis that will allow physicists to study B meson physics and probe the anomalies reported in the lepton flavour sector.

For the HL-LHC detector upgrade, track candidates will have to be formed at 40 MHz to improve the real-time event selection and maintain the physics capabilities under the most challenging particle densities and data rates. CMS demonstrated that offloading the most time-consuming part of the online event reconstruction, pixel-based tracking, from a CPU to a GPU doubles the event processing speed while also improving physics performance. This upgrade is planned for deployment starting from Run 3, when each node in the High Level Trigger (HLT) farm will be equipped with one NVIDIA Tesla T4 GPU. CMS has also ported the track reconstruction to Field-Programmable Gate Arrays (FPGAs), which are attracting growing interest alongside GPUs.

Offline Computing

Machine Learning techniques are being tested by the experiments in many areas, including reconstruction and particle identification. Image-based techniques work well for simple geometries, while graph and interaction networks look promising for more complicated ones.

Parallelization is powerful. The TrackML Tracking Challenge has shown that breaking the data into small portions makes each problem simpler and faster to solve, and that even a large number of simple problems can still be solved quickly enough. In addition, a lot of work is ongoing to speed up simulation. Together with improvements in fast simulation, using Machine Learning for generative simulation shows promising results. Savings in memory, not only in CPU time, are valuable, and the experiments are working in this direction.
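To make the divide-and-conquer idea a little more concrete, here is a minimal Python sketch that is not taken from any TrackML solution: toy detector hits are assigned to azimuthal (phi) sectors, and each sector is then processed independently. The hit format `(x, y, z)`, the choice of 8 sectors and the placeholder `process_sector` function are all hypothetical assumptions made for illustration.

```python
import math
from concurrent.futures import ThreadPoolExecutor

N_SECTORS = 8  # arbitrary choice for this illustration (assumption)

def sector_of(hit, n_sectors=N_SECTORS):
    """Return the index of the azimuthal sector containing a hit (x, y, z)."""
    x, y, _ = hit
    phi = math.atan2(y, x) % (2 * math.pi)  # map azimuth to [0, 2*pi)
    # min() guards against phi rounding up to exactly 2*pi
    return min(int(phi / (2 * math.pi) * n_sectors), n_sectors - 1)

def partition(hits, n_sectors=N_SECTORS):
    """Split the hits into n_sectors independent lists."""
    sectors = [[] for _ in range(n_sectors)]
    for hit in hits:
        sectors[sector_of(hit, n_sectors)].append(hit)
    return sectors

def process_sector(sector_hits):
    """Hypothetical placeholder for a per-sector algorithm (e.g. track seeding).
    Here it only counts the hits it receives."""
    return len(sector_hits)

def run(hits, n_sectors=N_SECTORS):
    """Partition the hits, then process every sector concurrently.
    Threads keep the sketch simple; for CPU-bound pure-Python work a
    ProcessPoolExecutor would usually be used instead."""
    sectors = partition(hits, n_sectors)
    with ThreadPoolExecutor() as pool:
        # map() preserves input order, so result i belongs to sector i
        return list(pool.map(process_sector, sectors))

if __name__ == "__main__":
    toy_hits = [(math.cos(a), math.sin(a), 0.0) for a in (0.1, 1.0, 2.0, 3.0, 4.0, 5.0)]
    print(run(toy_hits))  # one hit count per sector
```

Because each sector is handled on its own, the work can be spread across as many workers as are available. A real tracking algorithm would also need to deal with tracks that cross sector boundaries, for instance by using overlapping sectors; that bookkeeping is omitted here.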

Middleware and Distributed Computing

CMS presented results on the scalability of our computing infrastructure towards the HL-LHC era. The main challenge identified is that each High Performance Computing (HPC) facility has its own operational policies and architecture.

HPC sites such as NERSC or BSC differ, for example, in their security policies, in how much flexibility they allow for installing organization-specific software, and in their network connectivity. While Grid resources are largely homogeneous in their connectivity, the diversity of HPC environments makes it challenging for experiments to run their computing workflows there.

Facilities, Clouds, and Containers

The common analysis tools in High Energy Physics (HEP) used to be based on software products developed entirely within the community. Nowadays, however, the data science industry develops powerful analysis tools with a large user base across many domains. Tools like Jupyter notebooks are well suited to analysis in HEP. As a result, more and more scientists, particularly younger ones, are adopting these tools for their research.

The HEPiX TechWatch working group follows developments and trends in the IT market and in the road maps of various vendors. The group focuses mainly on hardware components such as CPUs, disk drives, SSDs, tapes and network equipment. Its insights often guide participating HEP sites in procurement and in planning their data center infrastructure. According to HEPiX TechWatch, after many years of Intel dominance in the CPU market, AMD now offers CPUs that are competitive in price, performance and power consumption. The market for magnetic hard drives is shrinking, and for tapes there is essentially only one company left. This lack of competition is a concern, since such a situation usually translates into higher prices and slower product development. In contrast, the Ethernet networking market is very dynamic, and further increases in bandwidth are expected.

Collaboration, Training, Education, Outreach

Collaboration, training, education and outreach had its own place in the conference programme for the first time at CHEP. Outreach and Education (O&E) was viewed as a strategic aim of any publicly funded science organisation and its departments, helping to build public trust in publicly funded research and to secure solid support from policy makers for the continuation of HEP research. The presentations revolved around the importance of passing on knowledge and inspiring young people to pursue careers in Science, Technology, Engineering and Mathematics (STEM).

Collaborative tools are expanding. For example, the collaboration with CS3, a distributed cloud system, will offer research labs and universities a global, collaborative environment for Data Science, Education and Outreach. A few examples of newly developed training software were shown, and the question of a future community-wide solution for training was raised. The portfolio of competencies required of successful HEP researchers has grown over the past years. The demand for HEP-oriented education has triggered many initiatives (e.g. university courses) that are closing the gap between what students are taught and the knowledge required to work in our field. The training needs of our community are also evolving, and it is essential that we adapt to this situation.

The CERN Open Data Portal is expanding, and the amount of data it hosts increases every year. The recent CMS Open Data release brings the volume of its open data to more than 2 PB (two million GB). CMS has now provided open access to 100% of its research data recorded in proton–proton collisions in 2010. The portal originally targeted educational use, but its scope has since been extended as more research is being done with these data.

Exascale Science

Several ongoing efforts were presented to increase and optimize the use of computing resources available at friendly HPC centers in many countries. This requires adapting the experiments’ workflows and developing tools that comply with the specific constraints imposed by each center, or selecting, for each experiment, the centers best suited to run a particular workflow. In addition, several contributions focused on exploiting the computing accelerators that are typically present in HPC environments.

Crossover between Online, Offline and Exascale

Interesting developments common to online and offline software were also discussed. Notably, the Fast Machine Learning Lab demonstrated that jet tagging can be performed at great speed using industry neural networks on a single Field-Programmable Gate Array (FPGA) chip, bucking the trend of acceleration through massively parallel computing seen elsewhere at the conference. Meanwhile, the TrackML Challenge provided reassuring evidence that “biological neural networks” with decades of training (i.e. physicists) can still outperform machines, with human-designed algorithms beating pure ML when optimising for both speed and accuracy.
